Purpose

Run Stable Diffusion from the command line with stable-diffusion.cpp, which works on AMD GPUs as well as on CPUs.

Environment setup

stable-diffusion.cpp

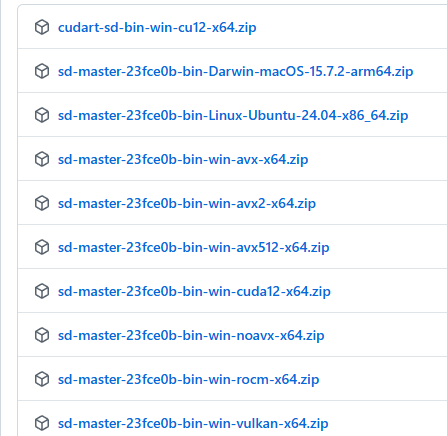

Download the ZIP file that matches your environment from the following page.

If you want to run it on an AMD GPU, look for versions labeled Vulkan or ROCm.

(Generally, Vulkan should be fine. ROCm tends to support a more limited range of GPUs.)

For NVIDIA GPUs, look for versions labeled "CUDA".

The AVX512, AVX2, AVX, and No-AVX versions run on the CPU. Check which AVX instruction sets your CPU supports before downloading. (I had assumed AMD CPUs didn't support AVX, but they do. The easiest way to find out which version your CPU supports is to ask an AI.)
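On Linux you can also read the CPU flags yourself. The sketch below assumes a Linux system with `/proc/cpuinfo` (it does not apply to Windows) and maps the flags to the build labels above. `pick_build` is a hypothetical helper name, and treating the `avx512f` flag as sufficient for the AVX512 build is an assumption.

```shell
#!/bin/sh
# Sketch (Linux only, assumption): suggest which CPU build to download
# by checking the CPU flags reported in /proc/cpuinfo.

# pick_build (hypothetical helper): reads CPU flags on stdin and prints
# the highest matching build label, checking the newest extension first.
pick_build() {
  flags=$(tr ' \t' '\n\n' | sort -u)
  if printf '%s\n' "$flags" | grep -qx 'avx512f'; then
    echo "AVX512"          # assumption: avx512f is enough for the AVX512 build
  elif printf '%s\n' "$flags" | grep -qx 'avx2'; then
    echo "AVX2"
  elif printf '%s\n' "$flags" | grep -qx 'avx'; then
    echo "AVX"
  else
    echo "No-AVX"
  fi
}

# Use only the first "flags" line; every core reports the same flags.
if [ -r /proc/cpuinfo ]; then
  grep -m1 '^flags' /proc/cpuinfo | pick_build
fi
```

Checking from the most advanced extension downward means a CPU that supports AVX2 is offered the AVX2 build rather than the slower AVX one.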

Once you have extracted the downloaded file to a folder of your choice, the setup is complete.

Model

If you don’t have the Stable Diffusion model locally, please refer to the following page to download it. (You can save the model anywhere you like.)

Execute

Open a terminal (command prompt) and change to the folder where you extracted stable-diffusion.cpp.

Run the following command. (Replace model_path with the actual path of the model you are using.)

sd-cli -m model_path -p "a lovely cat" -s -1

If a cat image is saved as ./output.png, it worked.
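If you want to check the result from a script instead of opening the image, one option is to confirm that ./output.png exists and starts with the standard 8-byte PNG signature. This is a sketch for a Unix-like shell; `is_png` is a hypothetical helper name.

```shell
#!/bin/sh
# is_png FILE (hypothetical helper): succeeds if FILE exists and begins
# with the PNG signature 89 50 4E 47 0D 0A 1A 0A.
is_png() {
  [ -f "$1" ] || return 1
  # Dump the first 8 bytes as hex and remove spaces/newlines
  sig=$(head -c 8 "$1" | od -An -tx1 | tr -d ' \n')
  [ "$sig" = "89504e470d0a1a0a" ]
}

if is_png ./output.png; then
  echo "OK: ./output.png was generated"
else
  echo "output.png is missing or is not a PNG file" >&2
fi
```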

Options (arguments)

The options are summarized on the following page.

Only the most commonly used ones are listed below.

| Option | Description |
|---|---|
| -m | Path to the model |
| -p | Prompt |
| -s | Seed. Specify -1 to generate a random image. If this is omitted, the same image is generated every time. |
| -H | Image height |
| -W | Image width |
| --vae | Path to the VAE |
| --steps | Number of steps (default: 20). Note that some models perform better with fewer steps. |
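To combine the options in the table above without retyping them, a small wrapper like the following may help. It is a sketch that assumes a Linux/macOS shell started in the extracted folder (so the binary is `./sd-cli`). `run_sd`, `SD_BIN`, `WIDTH`, `HEIGHT` and `STEPS` are hypothetical names made up for this example, and 512x512 is only a starting size (SDXL models such as bluePencilXL are usually run at 1024x1024).

```shell
#!/bin/sh
# Sketch: wrap sd-cli with the commonly used options from the table.
# SD_BIN, WIDTH, HEIGHT and STEPS are hypothetical variables for this example.
SD_BIN=${SD_BIN:-./sd-cli}

# run_sd MODEL PROMPT [VAE] (hypothetical helper)
run_sd() {
  model=$1            # -m: path to the model file
  prompt=$2           # -p: prompt text
  vae=${3:-}          # --vae: optional separate VAE file
  # Rebuild the argument list; -s -1 requests a random seed on every run
  set -- -m "$model" -p "$prompt" -s -1 \
    -W "${WIDTH:-512}" -H "${HEIGHT:-512}" --steps "${STEPS:-20}"
  if [ -n "$vae" ]; then
    set -- "$@" --vae "$vae"
  fi
  "$SD_BIN" "$@"
}

# Example calls (model paths are placeholders):
# run_sd ./v1-5-pruned-emaonly.safetensors "a lovely cat"
# STEPS=10; run_sd ./bluePencilXL_v700.safetensors "a lovely cat"
```

Building the arguments with `set --` keeps a prompt containing spaces as a single argument, which is easy to get wrong when the command is assembled as one string.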

Verification

I confirmed that it works with the following models.

- bluePencilXL_v700.safetensors

- v1-5-pruned-emaonly.safetensors

Execution time

Image generation times are as follows. (Model loading time and the processing after the sampling iterations are excluded.)

| Environment | Generation time (s) |
|---|---|
| CPU (Stable Diffusion WebUI) | 263 |
| GPU (Stable Diffusion WebUI) | 63 |
| CPU (stable-diffusion.cpp AVX2) | 249 |
| GPU (stable-diffusion.cpp Vulkan) | 36 |
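To put the timings in perspective, the speed-up of each run relative to the CPU WebUI run can be computed directly from the table. The sketch below hard-codes the four measurements and divides the first (baseline) time by each row's time; `speedups` is a hypothetical function name.

```shell
#!/bin/sh
# speedups (hypothetical helper): reads "label|seconds" lines and prints each
# time plus its speed-up relative to the first line (baseline / this time).
speedups() {
  LC_ALL=C awk -F'|' '
    NR == 1 { base = $2 }
    { printf "%-34s %4d s  x%.2f\n", $1, $2, base / $2 }
  '
}

# Prints the ratios x1.00, x4.17, x1.06 and x7.31 for the four rows.
speedups <<'EOF'
CPU (Stable Diffusion WebUI)|263
GPU (Stable Diffusion WebUI)|63
CPU (stable-diffusion.cpp AVX2)|249
GPU (stable-diffusion.cpp Vulkan)|36
EOF
```

By the same arithmetic, the Vulkan build is 63 / 36 = 1.75 times as fast as the WebUI's GPU run, while on the CPU the two are almost equal.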
