The purpose

I will use Google’s Gemini API with Node.js to generate music. The implementation is frontend-only, with Google acting as the backend. You will need to obtain a Google API key.

Note: At the time of writing this article, Gemini’s music generation is a preview feature. Specifications and other details may change in the future.

This article demonstrates a client-side implementation using Node.js. However, this method is not recommended by Google because it risks exposing your API key to users.

Therefore, please limit its use to personal projects or experiments.

This article is primarily based on the following page. However, the JavaScript example on that page was incorrect and did not work at the time this article was written.

License for Generated Music

My understanding is that the creator holds the copyright for the generated music and can use it under their own responsibility.

The source is the following page. Please be sure to check for the latest information before using it.

Prepare

Generate API key

Access the following page to obtain an API key.

Create project

First, create a folder for your project.

Then, open a command prompt in that folder and run the following command to create a Vite project.

npm init vite@latest

At the time of writing this article, the Vite version was 6.3.5.

If you want to match the settings, please specify that version.

You will be prompted to enter a name for your project.

(For this article, the name used is music.)

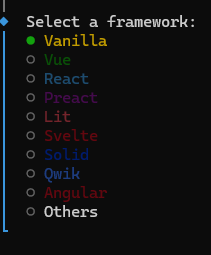

When prompted for the framework to use, select Vanilla.

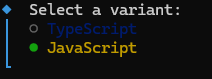

Next, select JavaScript for the language.

This completes the project creation.

Install library

After the project has been created, move into the project folder and install the necessary libraries.

cd music

npm install @google/genai

npm install

That’s it, the setup is complete.

Code modification

Modifying the example

As noted above, the example on the official page does not work. The corrected code is as follows. Please change the apiKey to the one you have issued. (To make it work, you need to load it from an HTML file.)

In the line weightedPrompts: [{ text: 'piano solo, slow', weight: 1.0 }], the string 'piano solo, slow' is the prompt. Feel free to change it to whatever you like.

musicGenerationConfig holds the generation settings, but changing it did not seem to affect the generated music. I could not determine whether the settings were being ignored or my code was incorrect.

Additional Notes:

It appears that the musicGenerationConfig settings are correct. I’m unsure if the BPM setting works, but onlyBassAndDrums seemed to function properly. It also appears that the prompt takes precedence over these settings, which might be due to the weight.

An important note: The official documentation states that the function to update the session is session.reset_context(), but this is incorrect. The correct function is session.resetContext().

import { GoogleGenAI } from '@google/genai';

const ai = new GoogleGenAI({
  apiKey: "API Key", // Do not store your API key client-side!
  apiVersion: 'v1alpha',
});

// Open the music session and register the callbacks.
const session = await ai.live.music.connect({
  model: 'models/lyria-realtime-exp',
  callbacks: {
    // Called for every event from the server, including the audio chunks.
    onmessage: async (e) => {
      console.log(e)
    },
    onerror: (error) => {
      console.error('music session error:', error);
    },
    onclose: () => {
      console.log('Lyria RealTime stream closed.');
    }
  }
});

// The prompt that describes the music to generate.
await session.setWeightedPrompts({
  weightedPrompts: [{ text: 'piano solo, slow', weight: 1.0 }],
});

// Generation settings.
await session.setMusicGenerationConfig({
  musicGenerationConfig: {
    bpm: 200,
    temperature: 1.0
  },
});

// Start generation.
await session.play();
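To make the notes about musicGenerationConfig and session.resetContext() more concrete, here is a minimal sketch of changing settings on a session that is already connected. The helper name updateMusicConfig and its reset option are hypothetical and exist only for this illustration. It assumes the session exposes setMusicGenerationConfig() and resetContext() as described above. My understanding is that some settings, such as bpm, may only take effect after the context is reset, but I have not confirmed this, so treat it as an assumption.

```javascript
// Hypothetical helper: apply new generation settings to a connected session.
// `session` is assumed to expose setMusicGenerationConfig() and resetContext(),
// the two methods named in this article.
async function updateMusicConfig(session, musicGenerationConfig, { reset = false } = {}) {
  await session.setMusicGenerationConfig({ musicGenerationConfig });
  if (reset) {
    // Assumption: some settings (for example bpm) may need a context reset
    // before the change becomes audible.
    await session.resetContext();
  }
}
```

With the session from the code above, this could be called as updateMusicConfig(session, { onlyBassAndDrums: true }) or as updateMusicConfig(session, { bpm: 90, temperature: 1.0 }, { reset: true }).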

Usage of Generated Music

The generated music is returned in the following callback.

onmessage: async (e) => {
  console.log(e)
},

I was able to get the music to play by referencing the following page.

However, since I was unsure of the licensing terms for the code I referenced, I will refrain from including the code.

The process is as follows:

- Convert e.serverContent.audioChunks[0].data (base64) into binary (int16) data.

- Convert the binary (int16) data into float32, then create an AudioBuffer.

- Set the created AudioBuffer to an AudioBufferSourceNode and play the audio.
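The three steps above can be sketched as follows. This is my own minimal version written from the description, not the code from the referenced page. It assumes the audio is little-endian 16-bit PCM, interleaved stereo at 48 kHz; please check the official documentation for the actual output format. All function names are hypothetical. The last two functions need a browser AudioContext, which is passed in as audioCtx.

```javascript
// Step 1: decode a base64 string into 16-bit PCM samples.
// Assumption: the bytes are little-endian.
function base64ToInt16(base64) {
  const binary = atob(base64);
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  const view = new DataView(bytes.buffer);
  const samples = new Int16Array(Math.floor(bytes.length / 2));
  for (let i = 0; i < samples.length; i++) {
    samples[i] = view.getInt16(i * 2, true);
  }
  return samples;
}

// Step 2a: scale int16 samples into the [-1, 1) range used by Web Audio.
function int16ToFloat32(int16) {
  const out = new Float32Array(int16.length);
  for (let i = 0; i < int16.length; i++) {
    out[i] = int16[i] / 32768;
  }
  return out;
}

// Step 2b: split interleaved samples into channels and fill an AudioBuffer.
// Assumption: 2 channels at 48000 Hz.
function toAudioBuffer(audioCtx, samples, channels = 2, sampleRate = 48000) {
  const frames = Math.floor(samples.length / channels);
  const buffer = audioCtx.createBuffer(channels, frames, sampleRate);
  for (let ch = 0; ch < channels; ch++) {
    const data = buffer.getChannelData(ch);
    for (let i = 0; i < frames; i++) {
      data[i] = samples[i * channels + ch];
    }
  }
  return buffer;
}

// Step 3: play the buffer through an AudioBufferSourceNode.
function playBuffer(audioCtx, buffer, when = 0) {
  const source = audioCtx.createBufferSource();
  source.buffer = buffer;
  source.connect(audioCtx.destination);
  source.start(when);
  return source;
}
```

In the browser, a chunk could then be played with playBuffer(audioCtx, toAudioBuffer(audioCtx, int16ToFloat32(base64ToInt16(data)))), where audioCtx is a new AudioContext() and data is the base64 string from the event.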

Troubleshooting

Here are some of the issues I encountered:

- The music created in a single event is short (1-2 seconds), so you need to receive multiple events to create a track of a decent length.

- The first event received by onmessage is a Setup event and does not contain any music data.

- The music generation continues indefinitely without an end, so there is no natural stop to the song.

- The music will not play without user interaction. To solve this, I made a button appear when generation was complete, and the music played when the user clicked the button.

- You may encounter Vite build errors or errors in older browsers. This is likely caused by the use of top-level await. To fix the build errors, you can either modify the code or change the settings. To make it work in older browsers, a code modification is necessary.
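Several of these issues can be handled in the message callback itself. The following is a rough sketch under my own assumptions, not a definitive implementation. It ignores events that carry no audio (such as the first Setup event), collects chunks across multiple events, and reports back once roughly the requested length of audio has arrived, which gives the endless stream an artificial stopping point. The names extractAudioChunks and createCollector are hypothetical, and the default format of 48 kHz stereo 16-bit PCM is an assumption.

```javascript
// Pull the base64 payloads out of one onmessage event.
// Returns [] for events without audio, such as the initial Setup event.
function extractAudioChunks(message) {
  const chunks = message?.serverContent?.audioChunks ?? [];
  return chunks.map((chunk) => chunk.data).filter(Boolean);
}

// Build an onmessage handler that collects chunks until about
// `targetSeconds` of audio has arrived, then calls `onDone` exactly once.
function createCollector({ targetSeconds, onDone, sampleRate = 48000, channels = 2 }) {
  const collected = [];
  const bytesPerSecond = sampleRate * channels * 2; // 2 bytes per 16-bit sample
  let bytes = 0;
  let done = false;
  return function handleMessage(message) {
    if (done) return;
    for (const data of extractAudioChunks(message)) {
      collected.push(data);
      // 4 base64 characters encode 3 bytes. Padding is ignored, so this
      // is only an estimate of the decoded size.
      bytes += Math.floor((data.length * 3) / 4);
    }
    if (bytes >= targetSeconds * bytesPerSecond) {
      done = true;
      onDone(collected);
    }
  };
}
```

The returned handler can be passed as the onmessage callback. Inside onDone you could close the session and show the play button, so that playback starts from a user click. Wrapping the connection code in an async function and calling that function, instead of using await at the top level of the module, is one way to make the code modification mentioned in the last item.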

Result

We were able to use the Gemini API to generate music.

Reference
